VMware VEBA 0.5.0 stops working

In my lab, the VMware VEBA 0.5.0 Fling stopped working; this has happened twice now.

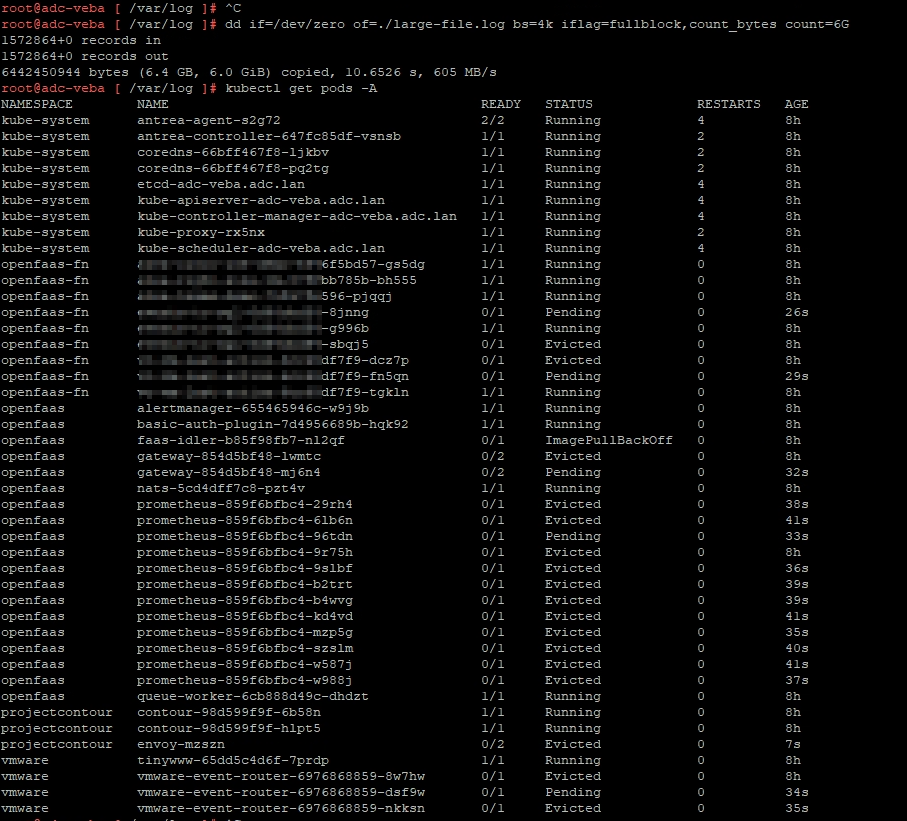

When logging in to the VEBA appliance, you can see many pods in the “Evicted” state. I had around 12,000 evicted pods after 2-3 days. The screenshot is from the troubleshooting I did after fixing the problem, when I reproduced it for the bug report.
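To track how fast evicted pods pile up, a small filter over the default `kubectl get pods --all-namespaces` table can count them. This is a minimal sketch: the function name `count_evicted` is hypothetical, and it assumes STATUS is the 4th column of the table, the same assumption the bulk-delete command later in this post relies on.

```shell
# Hypothetical helper: count pods whose STATUS column (4th field of the
# default "kubectl get pods --all-namespaces" table) is "Evicted".
# Reads the table on stdin, so it can be fed from kubectl or a saved file.
count_evicted() {
  awk '$4 == "Evicted" { n++ } END { print n + 0 }'
}

# Usage on the appliance (requires kubectl access to the cluster):
#   kubectl get pods --all-namespaces | count_evicted
```

The header line is never counted, because its 4th field is the literal word `STATUS`.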

After some troubleshooting, I found that the root filesystem (/) was 90 percent full.
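The mass eviction fits the kubelet's disk-pressure behaviour: by default, the kubelet starts evicting pods when available space on the node filesystem drops below 10% (`nodefs.available<10%`), which is roughly where a 90% full `/` sits. Whether VEBA 0.5.0 keeps that default is an assumption. A quick way to keep an eye on usage, as a sketch with hypothetical function names and an arbitrary 80% warning threshold:

```shell
# Hypothetical helper: print how full the root filesystem is, as a bare
# integer percentage. "df -P" forces the POSIX output format, so the data
# is always on line 2 and the usage is always in column 5 (e.g. "90%").
root_usage_percent() {
  df -P / | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Hypothetical helper: warn (and return 1) when usage reaches the threshold.
# The default of 80 is an arbitrary example value.
check_root_usage() {
  threshold=${1:-80}
  pct=$(root_usage_percent)
  if [ "$pct" -ge "$threshold" ]; then
    echo "WARNING: / is ${pct}% full (threshold ${threshold}%)"
    return 1
  fi
  echo "OK: / is ${pct}% full"
}
```

Running `check_root_usage` from cron or a login script would have flagged the problem well before the kubelet started evicting pods.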

Looking for the cause, I found that the file “/var/log/conntrackd-stats.log” keeps growing and is never truncated.
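To confirm which file is consuming the space, listing the largest files under `/var/log` is usually enough. A minimal sketch with a hypothetical helper name; `du -k` reports allocated size in kilobytes, so the numbers are approximate:

```shell
# Hypothetical helper: list the N largest regular files under DIR,
# biggest first, as "<size in KB><TAB><path>".
largest_files() {
  dir=${1:-/var/log}
  n=${2:-10}
  find "$dir" -type f -exec du -k {} + 2>/dev/null | sort -rn | head -n "$n"
}

# Usage on the appliance:
#   largest_files /var/log 5
```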

The solution was to delete “/var/log/conntrackd-stats.log” and all “Evicted” pods, and then reboot. After the reboot, it might also be necessary to delete newly evicted pods. I also powered off the appliance for 10 hours; I don’t know if that was needed, but it might have helped because of the Docker Hub rate limit.
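One detail worth knowing when reclaiming the space: if the process writing “/var/log/conntrackd-stats.log” still holds the file open, deleting it with `rm` does not free the disk space until that process closes it or the appliance reboots. Emptying the file in place frees the space right away. How VEBA's conntrackd opens this file is an assumption here, so treat this as a general sketch; the helper name `empty_log` is hypothetical:

```shell
# Hypothetical helper: empty a log file in place instead of deleting it.
# Truncating keeps the same inode, so the space is released even if a
# process still has the file open (an rm'd but still-open file keeps
# using disk space until its last file descriptor is closed).
empty_log() {
  file=$1
  if [ ! -f "$file" ]; then
    echo "not a regular file: $file" >&2
    return 1
  fi
  : > "$file"
}

# Usage on the appliance (as root):
#   empty_log /var/log/conntrackd-stats.log
```

This only reclaims space; the file will keep growing until the underlying bug is fixed.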

To delete all “Evicted” pods, I used this command:

```shell
# Force-delete every pod whose STATUS (4th column of the table) is "Evicted"
kubectl get po --all-namespaces | awk '{if ($4 == "Evicted") system("kubectl -n " $1 " delete pods " $2 " --grace-period=0 --force")}'
```
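The awk filter depends on STATUS being the 4th column of the table output. A variant that selects on the pod's `status.reason` field (set to `Evicted` for evicted pods) through kubectl's JSONPath filter syntax avoids that dependency and lets you review the list before deleting anything. This is a sketch, assuming the kubectl on the appliance supports JSONPath filter expressions; `list_evicted` and `delete_evicted` are hypothetical names:

```shell
# Hypothetical helper: print "namespace name" for every pod whose
# status.reason is "Evicted", using kubectl's JSONPath filter syntax.
list_evicted() {
  kubectl get pods --all-namespaces \
    -o jsonpath='{range .items[?(@.status.reason=="Evicted")]}{.metadata.namespace}{" "}{.metadata.name}{"\n"}{end}'
}

# Hypothetical helper: force-delete the pods reported by list_evicted.
# kubectl's stdin is redirected from /dev/null so it cannot consume the
# rest of the list that the loop is reading.
delete_evicted() {
  list_evicted | while read -r ns name; do
    [ -n "$name" ] || continue
    kubectl -n "$ns" delete pod "$name" --grace-period=0 --force < /dev/null
  done
}

# Usage: review first, then delete.
#   list_evicted
#   delete_evicted
```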

I have filed a bug report in the GitHub repository so this can be fixed.

Note: I also see the docker pull error on Openfaas-idle, which William Lam has already filed a bug report for.